Since before artificial intelligence was invented, people have predicted that it would be the downfall of humanity. Now that AI is here and increasingly part of our everyday lives, warnings about its dangers grow louder and, frankly, more terrifying by the day.

Just take the words of Dr Geoffrey Hinton, the ‘godfather of AI’, who told an MIT Technology Review conference last week that there is an “existential threat” of AI “taking control.”

“I used to think it was a long way off, but I now think it’s serious and fairly close,” Hinton said.

The pioneering researcher, who shared the 2018 Turing Award for his work on deep-learning neural networks, quit Google last week after a decade at the company so that he could speak out about the dangers of AI.

Speaking to the BBC, the British-Canadian psychologist and computer scientist said that AI chatbots could soon overtake the human brain in terms of cognitive processing.

“Right now, what we’re seeing is things like GPT-4 eclipses a person in the amount of general knowledge it has, and it eclipses them by a long way. In terms of reasoning, it’s not as good, but it does already do simple reasoning,” he said.

He believes that current models reason at the equivalent of a human IQ of around 80 or 90, and he is concerned about what happens when they reach an IQ of 210. AI is also, fundamentally, a different beast to anything humans have encountered in the physical world.

“I’ve come to the conclusion that the kind of intelligence we’re developing is very different from the intelligence we have,” Hinton said.

“We’re biological systems, and these are digital systems. And the big difference is that with digital systems, you have many copies of the same set of weights, the same model of the world.

“And all these copies can learn separately but share their knowledge instantly. So it’s as if you had 10,000 people, and whenever one person learnt something, everybody automatically knew it. And that’s how these chatbots can know so much more than any one person.”

Caterpillars extract nutrients which are then converted into butterflies. People have extracted billions of nuggets of understanding and GPT-4 is humanity's butterfly.

— Geoffrey Hinton (@geoffreyhinton) March 14, 2023

So, what is to be done? Well, in March, more than a thousand people in the tech industry signed an open letter urging AI labs to pause development over concerns about out-of-control systems. Twitter boss Elon Musk was one of the signatories calling for a six-month pause on training AI systems more powerful than GPT-4. Musk has been calling for AI regulation since at least 2017.

The letter, published by the Future of Life Institute, said that “recent months have seen AI labs locked in an out-of-control race to develop and deploy ever more powerful digital minds that no one – not even their creators – can understand, predict, or reliably control”.

It called for “new and capable regulatory authorities dedicated to AI” to be created so that guidelines and restrictions can be put in place.

Hinton has described the letter as “silly,” saying that, while it might be “quite sensible” to just stop developing AI, “I think it is completely naïve to think that would happen.”

He has said that while “guardrails” are a good idea, once AI becomes smarter than us, imposing them will be a bit like a two-year-old trying to set rules for its parents. In addition, unless there is international consensus on the use of AI, similar to what we have with nuclear weapons, any country that restricts the technology will simply be out-competed by one that doesn’t.

Competition is what Hinton believes has exacerbated the current problem. Google, he argues, has been “very responsible” when it comes to AI. But the recent launch of Microsoft’s Bing chatbot, as well as OpenAI’s ChatGPT, is pushing Google to deploy technology ever more rapidly, in what Hinton sees as an emerging arms race.

In the NYT today, Cade Metz implies that I left Google so that I could criticize Google. Actually, I left so that I could talk about the dangers of AI without considering how this impacts Google. Google has acted very responsibly.

— Geoffrey Hinton (@geoffreyhinton) May 1, 2023

In the near term, the problem he predicts, and one that is already proving difficult, is the internet being flooded with fake images, videos, and text. Soon, Hinton says, people will not be able to tell what is real online and what isn’t, leading to mass confusion and manipulation.

Employment is another big issue. Hinton says AI could soon take over “drudge work,” but other jobs are in the firing line too. Paralegals, personal assistants, translators, and anyone else who handles rote tasks are all at risk. Some have pointed out that robots could one day staff the medical and legal professions and, of course, take over the work of people who make a living writing.

The current Writers Guild of America strike is, in part, over the use of AI to replace human scriptwriters. It’s conceivable that this profession could go the same way as the weavers who smashed textile machines in the early 1800s.

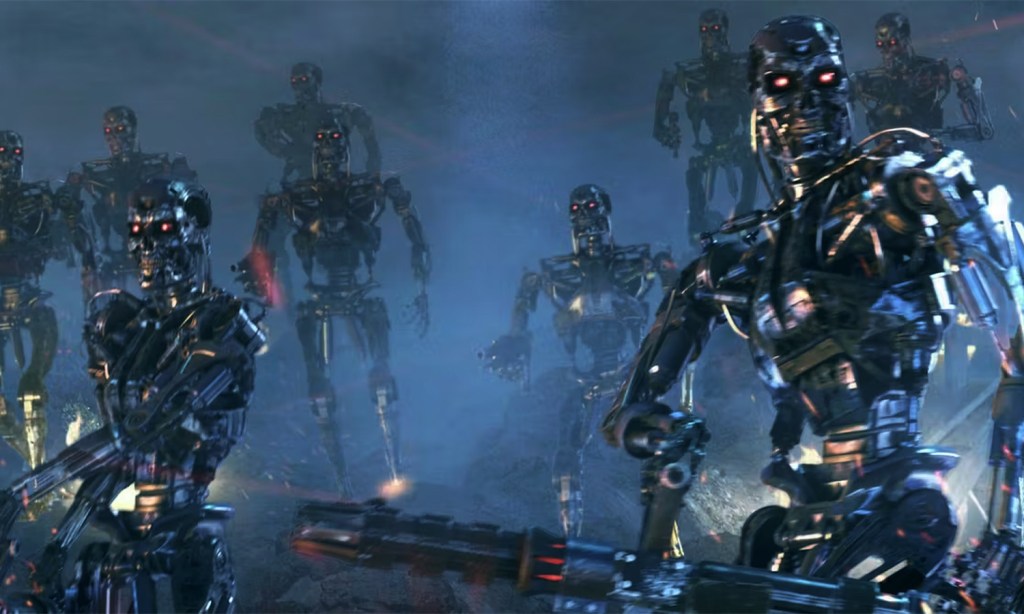

Beyond that, the dangers lie in the use of AI in weapons systems. In 2017, Hinton called for an international ban on AI-powered military weapons, which he calls “killer robots.” No such ban has happened, and there is currently no regulation of AI in any industry as governments are still trying to work out the implications of the technology.

Artificial Intelligence is one of the most powerful tools of our time, but to seize its opportunities, we must first mitigate its risks.

Today, I dropped by a meeting with AI leaders to touch on the importance of innovating responsibly and protecting people's rights and safety.

— President Biden (@POTUS) May 4, 2023

Many of the smartest minds in technology have been warning for years that the day is coming when AI will need regulation. If that’s not possible, then the day is also coming when AI may well take over the world. However, many others argue that the risks, while not impossible, are improbable and depend on a long chain of contingencies.

Andrew Ng, then Vice-President and Chief Scientist of the Chinese tech giant Baidu, famously quipped that fearing the dangers of AI now is “like worrying about overpopulation on Mars when we have not even set foot on the planet yet.”

Still, it’s somewhat reassuring that Google’s DeepMind has worked on an AI off switch: a “big red button” that could be pushed in the event of an AI takeover. Let’s hope everyone is building their machines with the same level of caution.

Read more stories from The Latch and subscribe to our email newsletter.